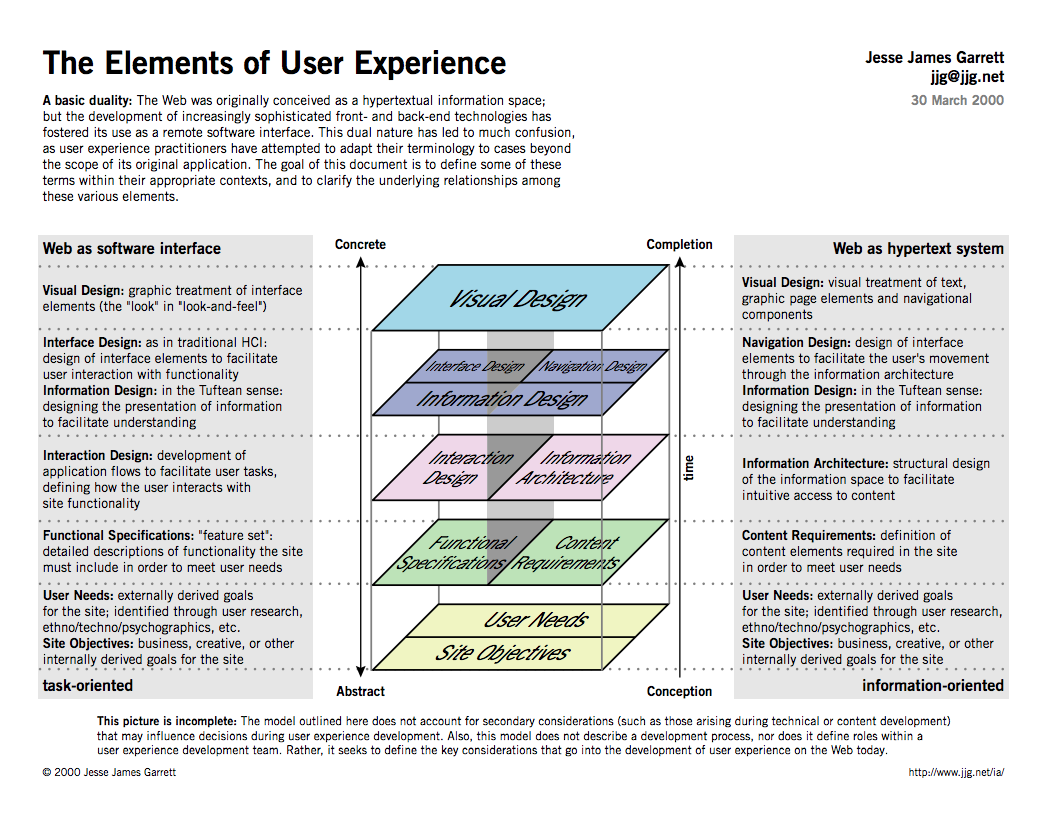

I chose to write about Jesse James Garrett because, frankly, I do quite a bit of UI/UX work and I wasn't familiar with him or The Elements of User Experience. The graphic below was featured in our reading and I found it interesting for several reasons:

- It's an informative breakdown of both the layers of a software interface and the pipeline for web development.

- In doing the above, the image defies conventions by having the same illustration serve as a map for multiple processes.

The graphic is from Garrett's book, The Elements of User Experience: User-Centered Design for the Web, and, according to his website, serves as a visual abstract of the book.

This is a great primer and I think I'll use this image to facilitate conversation about the development of UI/UX in my own classes. Terms like user experience, interface design and interaction design are often used interchangeably and this is a good breakdown on their differences. I could see this being particularly useful in developing common language for a development team or when establishing a relationship with a new client.

I love that this doesn't start with a flowchart and then progress to wireframes, prototypes and production. Rather, the project pipeline begins much earlier, in the concept development stage. It reminds me of something our guest teacher in Data Structures said last night, that 80% of data management is cleaning and parsing. Similarly, product/web development requires an enormous amount of discussion just to set parameters, define goals and values, understand the definition of success, etc.

While this graphic is great at describing parallel flows, I wish it addressed the idea of feedback, but perhaps that's too much to ask from a single image. And within the domain of web development, feedback is largely constrained to button states and transitions.

I'd like to expand on this system to see how these 'steps' actually manifest in a production.

I have a particular interest in game development, so I connected the above graphic to diegesis theory and would love to work through, with my students and clients, the exercise of determining when to plan for the UI representations below.

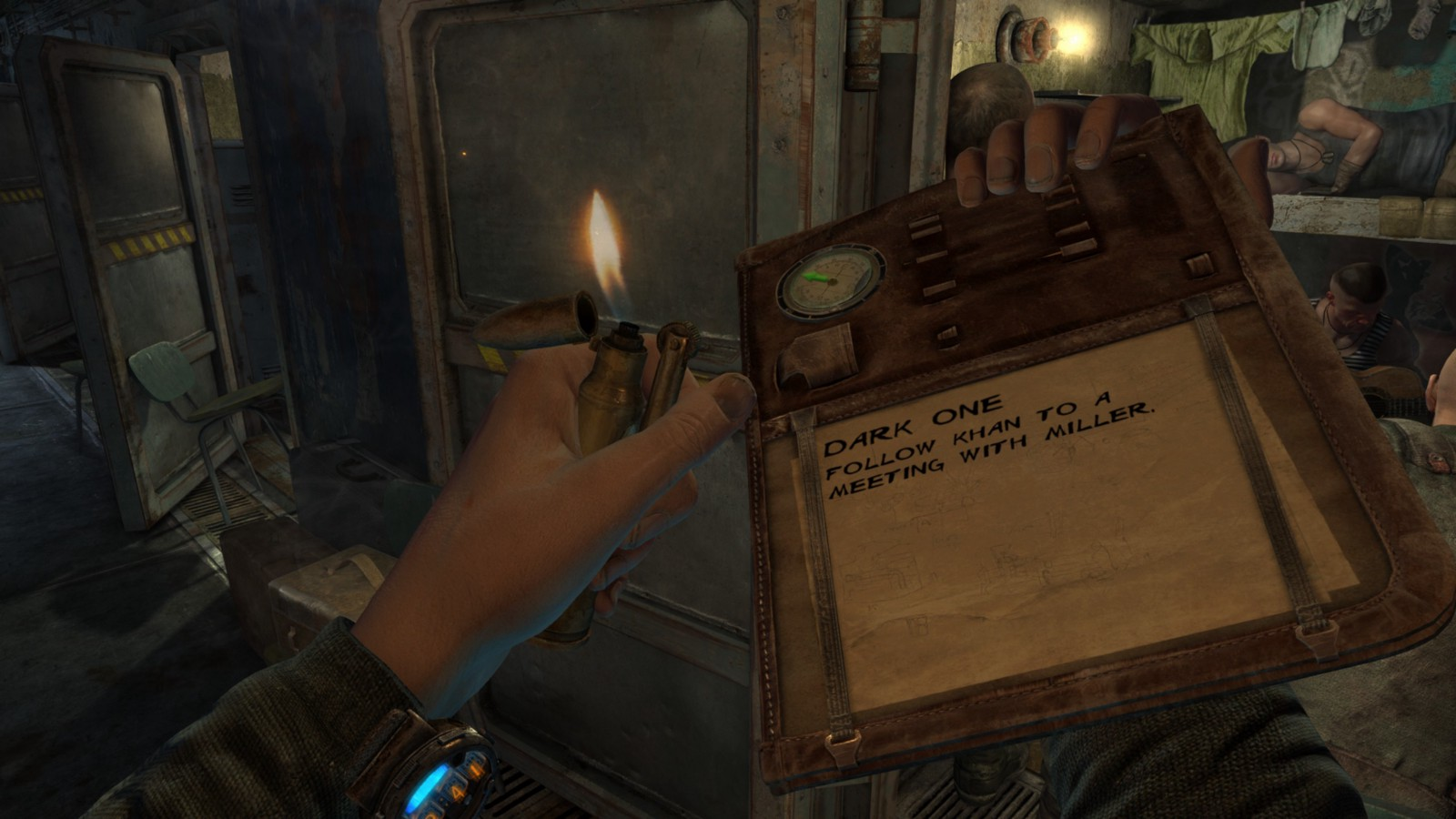

Diegetic Components

Diegetic components are typically featured within the game world as things the player’s avatar, and other characters, are aware of.

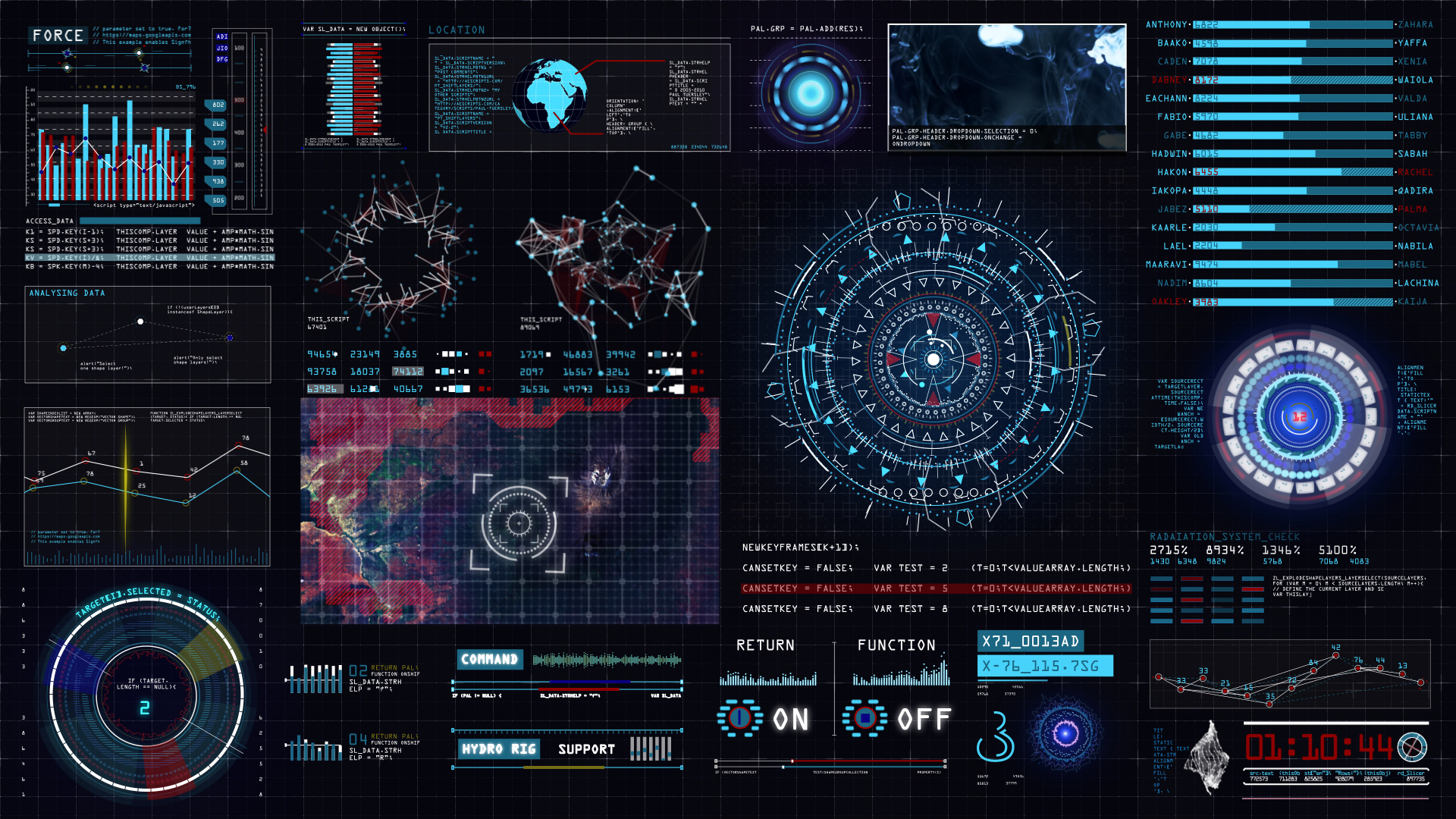

Non-Diegetic Components

The interface component is neither in the game story nor in the game space. A heads-up display (HUD) is the most common manifestation. It's efficient, doesn't interfere with the game space and can be skinned to support the aesthetic without appearing in-world. It is often used for quick access to critical information but also for embedded menu systems. Need to check your quests? You're usually navigating to them via a non-diegetic UI component.

Spatial Components

The interface component is NOT in the game world but DOES appear in the game space. That is, the characters in the game space are not aware of it, but it communicates some state change within the environment.

Often used to communicate area of effect and what is within an NPC's field of vision.

Meta Components

Interface components that are in the game story but NOT in the game space. Often these are clues to a character's state but lack the clarity of a HUD component. For example, a meta component of blood splatter on the screen might communicate that the player is hurt but not how close the player is to dying.
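
As a way to run the exercise with students, the four categories above can be read as a two-question test: is the component in the game story, and is it in the game space? Below is a minimal Python sketch of that reading. The names `UIKind` and `classify_ui` are hypothetical, and treating diegetic components as "in both story and space" is my assumption based on the description above, not something the source states in those terms.

```python
from enum import Enum


class UIKind(Enum):
    """The four UI representations discussed above (names are illustrative)."""
    DIEGETIC = "diegetic"
    NON_DIEGETIC = "non-diegetic"
    SPATIAL = "spatial"
    META = "meta"


def classify_ui(in_story: bool, in_space: bool) -> UIKind:
    """Classify a UI component by two yes/no questions.

    in_story: is the component part of the game's fiction?
    in_space: is the component rendered inside the game's 3D/2D space?
    Assumption: a diegetic component answers yes to both.
    """
    if in_story and in_space:
        return UIKind.DIEGETIC      # e.g. an in-world object the avatar can see
    if in_story:
        return UIKind.META          # e.g. blood splatter on the screen
    if in_space:
        return UIKind.SPATIAL       # e.g. an area-of-effect outline
    return UIKind.NON_DIEGETIC      # e.g. a HUD or quest menu


if __name__ == "__main__":
    # A HUD health bar: not in the story, not in the game space.
    print(classify_ui(in_story=False, in_space=False).value)
```

Asking the two questions for each planned interface element early in the pipeline might help a team agree on which representation they are designing before any wireframes exist.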